What the APS must fix first to execute on the AI Plan 2025

The APS AI Plan sets a bold direction amid structural constraints. We unpack what must be fixed first for AI to deliver public value.

The greatest risk to the APS AI Plan is not technology failure, but premature scale without organisational readiness.

Australia has no shortage of AI ambition, investment, or tools.

What remains uncertain is whether government can build the structural conditions required to apply AI safely, consistently, and at scale.

This gap between ambition and execution is already evident nationally.

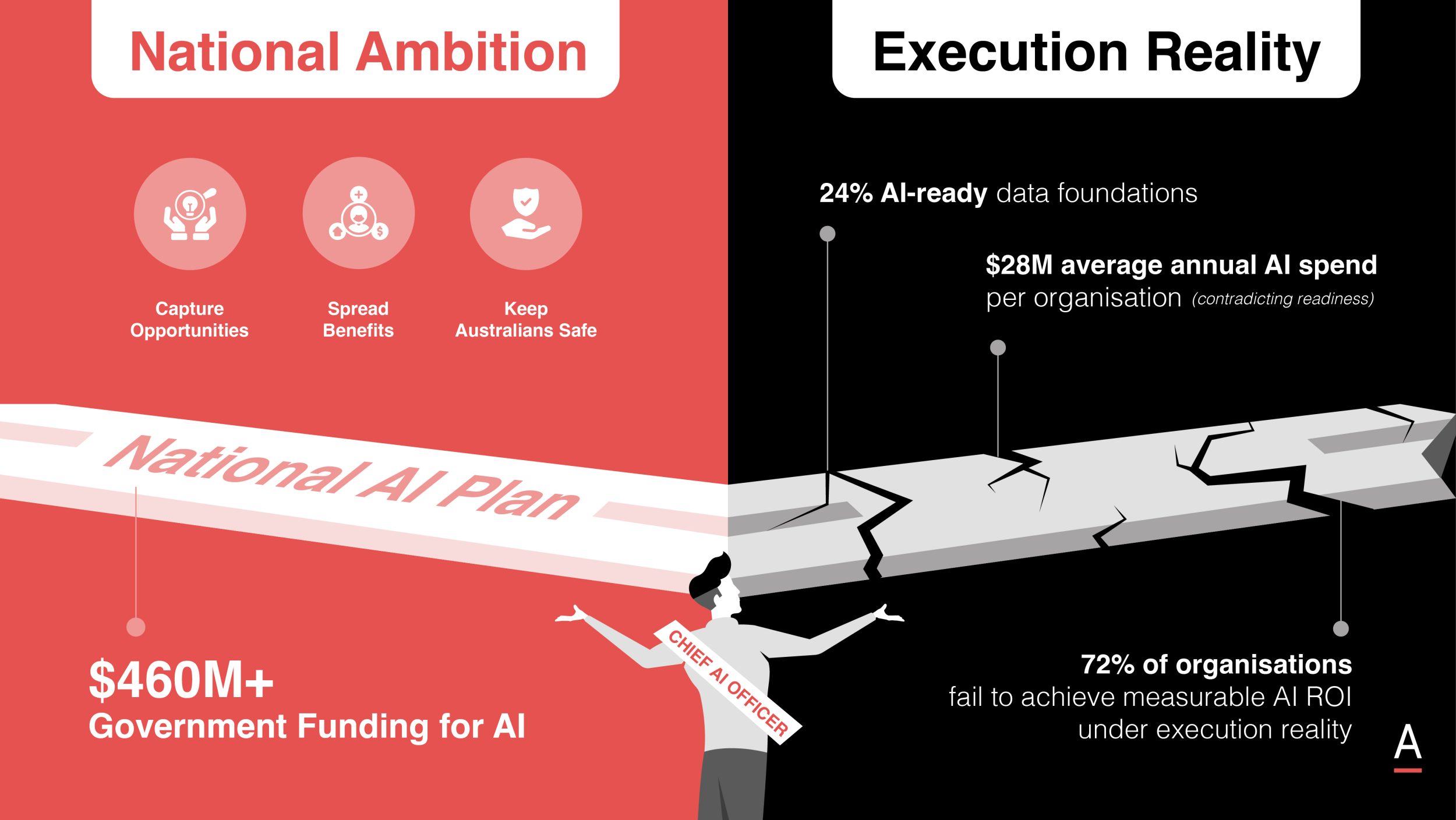

ADAPT research shows that 72% of Chief Data and Analytics Officers (CDAOs) say AI has not yet met ROI expectations, despite rising spend and executive attention.

Australia’s National AI Plan sets the long-term ambition to capture economic opportunity, spread the benefits of AI across society, and keep Australians safe as new risks emerge.

The government is backing that ambition with more than $460 million committed to AI and related initiatives.

The AI Plan for the Australian Public Service is where this national ambition becomes operational reality, defining how AI is governed, deployed, and used inside government.

ADAPT’s latest insights show a widening gap between AI ambition and readiness across Australian organisations.

Investment is accelerating, yet data quality, governance maturity, and workforce capability continue to lag.

That gap is already visible inside government, where pilots proliferate but confidence in outputs, accountability, and scalability remains uneven.

Execution, not intent, will determine whether these plans succeed.

If the APS cannot operationalise AI effectively, Australia’s ability to deliver on the National AI Plan’s broader economic and social goals will be weakened.

In practical terms, APS leaders must address four constraints in order, not in parallel:

- Establish clear enterprise data ownership and AI-ready architecture

- Centralise governance, risk management, and operational guardrails

- Build workforce capability and restore decision authority

- Scale platform-level AI use cases tied to priority service outcomes

Establish clear enterprise data ownership and AI-ready architecture

The APS AI Plan establishes Chief AI Officers (CAIOs) as senior leaders accountable for responsible AI adoption across each agency.

Their mandate spans governance, reporting, integration with whole-of-government platforms such as GovAI, and alignment with policy and service delivery.

Their introduction addresses a structural problem that has existed for years: AI ownership inside government has been fragmented.

This fragmentation is already constraining outcomes.

While most agencies are increasing AI investment, many struggle to measure value or move beyond isolated use cases.

Architectural readiness compounds the issue.

Across Australian organisations, average annual spend on AI and data platforms now sits around $28 million, yet only 24% report data architectures capable of supporting AI at scale.

Nearly 70% describe their data as only partially integrated or fragmented.

Inside government, this manifests as limited confidence in model outputs, inconsistent validation, and heightened operational risk.

Agencies attempting early deployments report AI tools surfacing outdated information or struggling with incomplete metadata, even within controlled environments.

For public sector leaders, this creates a clear sequencing problem.

Until data ownership, architecture, and accountability are resolved at an enterprise level, expanding AI use will increase risk faster than it delivers value.

CAIOs cannot succeed by managing tools alone.

Their primary task is to align data, architecture, and ownership across CIOs, CDAOs, and business leaders.

Without this alignment, AI will remain a collection of local experiments rather than a reliable enterprise capability.

Centralise governance, risk management, and operational guardrails

The APS AI Plan’s Trust pillar sets strong expectations for ethical, transparent, and auditable AI use.

It aligns AI adoption with the Protective Security Policy Framework, the Information Security Manual, privacy requirements, and ONDC guidance, while anticipating broader access to generative tools across the Service.

Reporting on APS Copilot trials described how many public servants welcomed the productivity gains from AI-assisted drafting and summarisation, although these benefits were accompanied by concerns about accuracy, unpredictable behaviour, and inconsistent quality.

The same reporting detailed instances where Copilot surfaced sensitive information or exposed documents beyond appropriate permission levels, signalling gaps in data storage, classification, and access controls.

Public concern remains heightened in the wake of Robodebt, where failures in automated decision-making eroded confidence in how government applies technology to citizen-facing processes.

The critical leadership decision here is speed versus safety.

Insights from ADAPT’s Government Edge reinforce the scale of the challenge.

Fewer than half of organisations report mature data security and risk management capabilities, even as most plan increased investment in Responsible AI and compliance.

Agencies that have progressed furthest have made a deliberate choice to slow expansion until governance is operational.

For instance, the Attorney-General’s Department paired AI access with mandatory training and a security-first operating model.

Defence Science and Technology Group developed internal language models within tightly governed environments rather than relying on external platforms.

These examples point to a clear prioritisation for public sector leaders.

Early centralisation of governance and guardrails must take precedence over agency-level autonomy.

Trust, once lost, cannot be iterated back in.

Build workforce capability and restore decision authority

The APS AI Plan places substantial emphasis on the People pillar, recognising that the capability of the workforce will determine how effectively AI can be embedded into policy, service delivery, and operational work.

The APS AI Plan commits to foundational AI training for every public servant and guidance for responsible use. This is necessary but not sufficient.

ADAPT’s national insights show AI literacy and data confidence remain uneven across workforces.

Where tools are deployed faster than capability, risk increases.

Staff may accelerate work without understanding how outputs are generated, validated, or escalated, particularly in policy and compliance contexts where decisions must be defensible.

Government Edge discussions highlighted how agencies are addressing this gap unevenly.

Services Australia has invested in cross-agency training and SAP capability development to support large-scale transformation while maintaining stability.

Home Affairs has broadened recruitment beyond Canberra and adopted hybrid models to attract scarce digital and AI skills.

Defence has deliberately rebuilt APS capability, restoring decision authority to public servants rather than outsourcing critical judgement.

The trade-off here is explicit. Access without capability increases risk. Leaders must treat workforce uplift as a prerequisite for AI scale, not a parallel initiative.

Scale platform-level AI use cases tied to priority service outcomes

The National AI Plan prioritises high-value use cases that lift productivity, competitiveness, and service outcomes across the economy.

Inside government, this translates into policy insight, forecasting, compliance detection, and citizen-facing services.

Yet most AI activity remains concentrated in low-risk convenience use cases such as document summarisation and administrative automation.

ADAPT data shows high-value strategic applications remain under-deployed, contributing to persistent challenges in demonstrating return on investment.

Government Edge leaders acknowledged this gap.

Agencies are running well-controlled pilots but struggle to scale due to unstable data foundations and fragmented governance.

Interest in advanced analytics and scenario modelling is high, but execution remains constrained.

The leadership choice is not whether to pilot more use cases.

It is which outcomes matter enough to justify platform-level investment.

Compliance detection, service forecasting, and complex triage should be prioritised over incremental productivity gains.

Pilots that cannot scale should be retired.