At ADAPT’s recent executive workshop, Australian senior leaders joined global AI governance expert Simon Kriss to unpack practical approaches for effective AI governance.

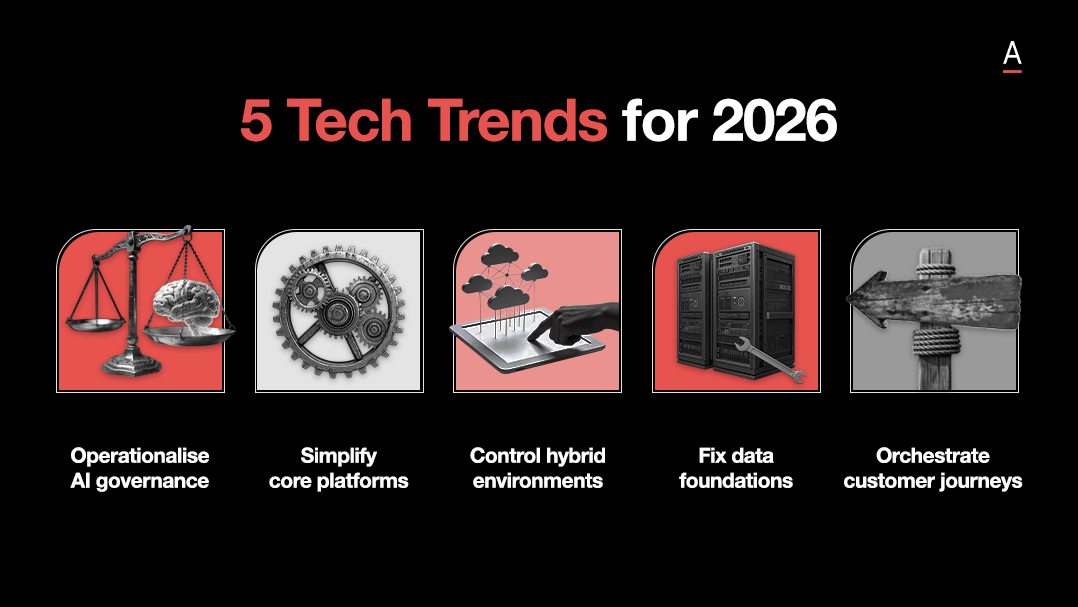

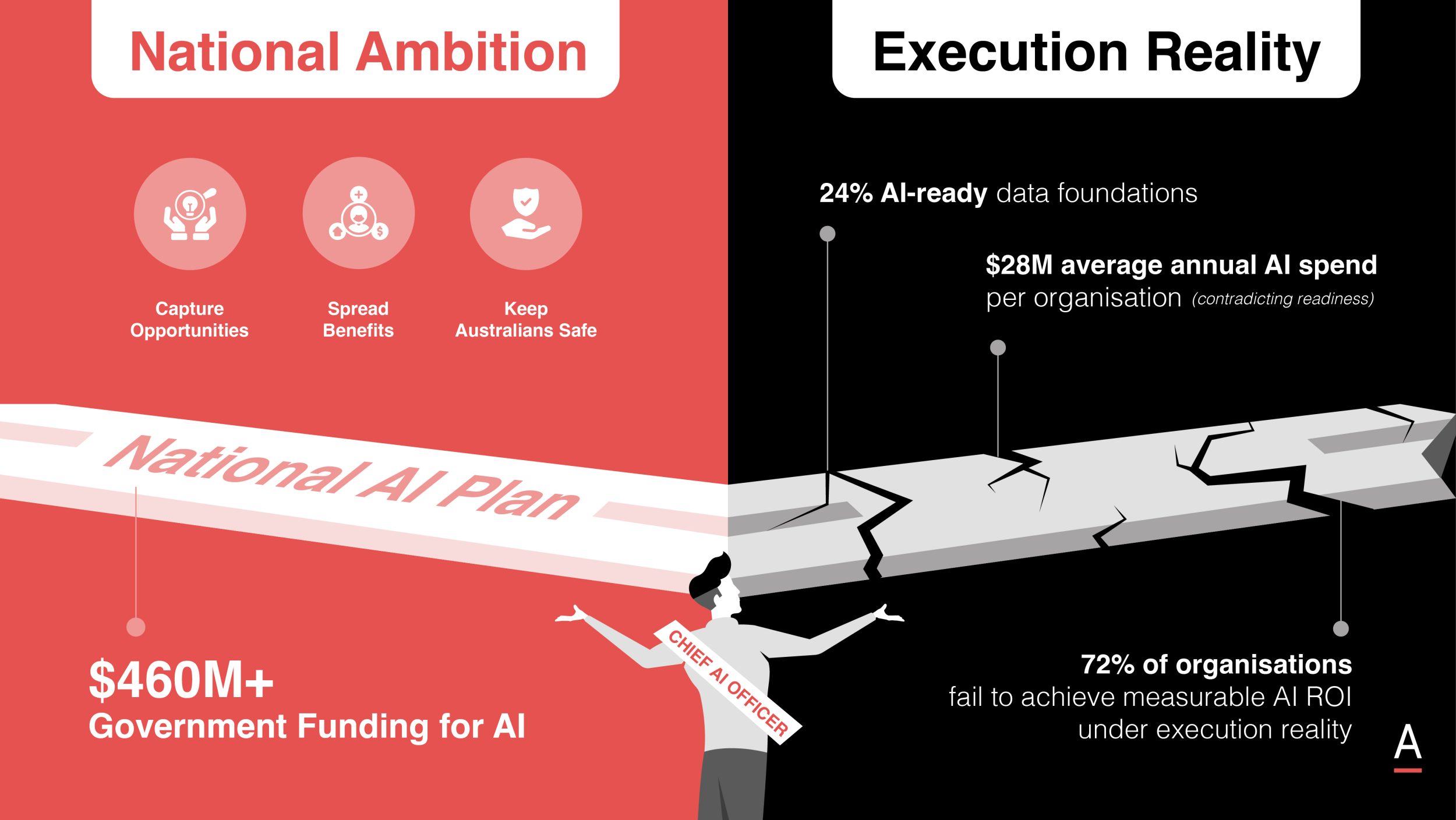

Organisations today see clear transformational opportunities from AI, yet many hesitate when moving beyond experimentation into full-scale production.

According to ADAPT’s recent Data & AI Edge survey, fewer than 50% of organisations have mature Data Security and Risk Management capabilities, while 70% of Chief Data and Analytics Officers (CDAOs) plan significant investments in Responsible AI, Governance, and Compliance within the next year.

The ambition to scale AI responsibly is clearly there, but turning that ambition into action remains a key challenge.

Simon Kriss serves as fractional Chief AI Officer to international firms and advises on ethical AI globally, including recent presentations to organisations such as the United Nations in Geneva. At the workshop, he guided participants through proven ways to establish practical and effective AI governance.

As an ADAPT Advisor, Simon Kriss draws on decades of global AI leadership to help ANZ enterprises validate strategy and scale ethical, governed AI with real-world impact.

We outline the key insights and practical next steps from the workshop to help leaders translate AI ambition into effective governance and clear action.

Understand and define organisational risk clearly

Simon emphasised the necessity of first clearly assessing the organisation’s risk tolerance.

Organisations holding sensitive customer information or serving vulnerable populations require stronger governance measures.

Conversely, those using AI primarily for internal efficiency or straightforward processes can start with more streamlined governance structures.

Leaders agreed that having clear, upfront conversations about risk accelerates governance design.

Organisations should therefore initiate internal reviews to define their risk appetite precisely, enabling quicker, more confident decision-making in AI projects.

Establish firm ethical guidelines early

Simon explained that organisations often stall because they lack a clear ethical baseline.

Leaders should proactively document ethical standards to determine appropriate uses of AI, especially in critical areas such as employee evaluation or sensitive customer interactions.

Establishing clear ethical boundaries upfront reduces uncertainty, allowing organisations to proceed confidently with implementation.

Ethical clarity makes governance more effective and helps prevent disruption during AI deployment.

Adopt simple governance models tailored to risk

Participants explored how governance frameworks can become overly complex and slow down innovation.

Simon recommended creating a straightforward governance matrix that aligns AI use-cases with data sensitivity and risk level.

For example, simple internal applications involving publicly available information may only require minimal governance oversight, whereas high-risk applications using personal data need direct board involvement.
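As a rough illustration, such a matrix can be written down as a small lookup. The sketch below is not from the workshop: the tier names, the three-point scales, the middle "executive review" tier, and the rule of taking the higher of the two ratings are all assumptions made for the example. Only the two end points (minimal oversight for low-risk use of public information, board involvement for high-risk use of personal data) come from the discussion above.

```python
from enum import IntEnum


class Sensitivity(IntEnum):
    """Hypothetical data-sensitivity scale."""
    PUBLIC = 1
    INTERNAL = 2
    PERSONAL = 3


class Risk(IntEnum):
    """Hypothetical use-case risk scale."""
    LOW = 1
    MEDIUM = 2
    HIGH = 3


# Assumed oversight tiers; the middle tier is invented for illustration.
OVERSIGHT_BY_LEVEL = {
    1: "minimal oversight",
    2: "executive review",
    3: "board involvement",
}


def required_oversight(sensitivity: Sensitivity, risk: Risk) -> str:
    """Return the oversight tier for a use case.

    Assumption: the stricter of the two ratings decides the tier.
    """
    level = max(int(sensitivity), int(risk))
    return OVERSIGHT_BY_LEVEL[level]


if __name__ == "__main__":
    # Internal tool summarising publicly available information.
    print(required_oversight(Sensitivity.PUBLIC, Risk.LOW))
    # High-risk application that uses personal data.
    print(required_oversight(Sensitivity.PERSONAL, Risk.HIGH))
```

An organisation would replace the scales and tiers with its own risk appetite and approval bodies; the point is that a matrix this small can be read and applied without a lengthy review process.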

Leaders appreciated this method’s simplicity, recognising it could enhance their governance agility without sacrificing control.

Simple, scalable governance structures help organisations implement responsible AI swiftly.

Clearly designate governance ownership within the business

One of the workshop’s main discussions revolved around AI ownership.

Simon observed that effective governance usually emerges when a senior business leader takes ownership, rather than relying solely on technology or data departments.

Business-driven governance ensures alignment with organisational strategy and smoother operational execution.

Organisations should therefore identify senior executives capable of bridging technical and strategic business needs to lead governance initiatives.

This strengthens organisational alignment and accelerates successful AI deployment.

Actively manage vendor accountability

Simon highlighted growing concerns about third-party AI services.

Organisations must ensure external suppliers adhere to their internal governance standards, including transparent data use, ethical practices, and clear model training processes.

Effective vendor management mitigates risk, supports compliance, and reinforces responsible AI use.

A critical takeaway is that rigorous, ongoing oversight of vendor governance practices strengthens an organisation’s overall risk posture.

Position governance strategically as an enabler

Finally, Simon reinforced that effective governance frameworks should be seen as strategic advantages rather than bureaucratic barriers.

Organisations that successfully integrate governance with their strategic priorities build greater trust with customers and stakeholders, positioning themselves as innovative, responsible market leaders.

Leaders left the workshop understanding governance as an accelerator of AI adoption, rather than an obstacle.

Embracing governance as a strategic enabler fosters deeper internal alignment and increases external credibility.

The next steps to practical AI governance

Organisations aiming to lead in AI must first define clear ethical standards, establish precise risk guidelines, appoint senior business leaders to champion initiatives, and actively manage vendor compliance.

Positioning these controls strategically will ensure alignment with organisational goals and build stakeholder trust.

Those who act decisively today will be better placed to innovate and to lead their markets.

Simon Kriss recently spoke at Data & AI Edge about how shifting board mindsets is essential to embedding responsible AI governance.

He underscored that ASIC now expects directors to be as well-versed in AI as they are in cyber security, yet many boards remain functionally illiterate on the subject, often jumping ahead to “how” without first asking “why”.

Watch his keynote presentation to see how you can win board support and safely accelerate AI innovation.