Why Australia’s AI ambitions will fail without a radical infrastructure rethink

Australia’s AI growth depends on unified cloud, cost control, security, and infrastructure built for density, sovereignty, and scale.

The nation’s AI trajectory is running up against an infrastructure reality.

Cloud, data centre, and edge strategies have expanded, but the pace of AI adoption is revealing structural weaknesses.

Workload placement decisions are shifting rapidly, compute demand is surging, and leaders face new trade-offs between cost, sovereignty, and performance.

Unless these environments are unified and optimised for AI’s requirements, the result will be slower delivery, higher costs, and missed opportunities.

Reason 1: Fragmented cloud, data centre, and edge strategies will stall AI delivery

Hybrid strategies have grown more complex.

ADAPT research shows hybrid cloud adoption has quadrupled in the past year to 21%, while only 30% of workloads now remain in public cloud, down from 46% the year before.

One in four organisations is actively repatriating workloads, a sign that current designs are not delivering the flexibility and performance AI demands.

Without cohesive integration, hybrid will become a bottleneck, not an enabler.

Asif Gill, Head of Discipline Software Engineering at the University of Technology Sydney, describes the future as “hybrid and federated”, with workloads spanning public cloud, on-premises infrastructure, and sector-specific AI clouds.

He argues for intentional architecture and observability, including digital twins of the enterprise to give leaders a satellite-level view of workloads, data flows, and GPU clusters for precise planning.

Failing to gain this level of visibility will leave leaders blind to capacity constraints and unable to place AI workloads where they run best.

Brendan Humphreys, Chief Technology Officer at Canva, adds that AI’s demands on infrastructure mean integration between platforms must be frictionless, with clear orchestration between data pipelines and the environments they run on to avoid service degradation.

Where orchestration lags, AI model performance will degrade and service uptime will suffer.

Edge capabilities are a critical part of this mix.

John Hopping, Chief Technology Officer APAC at Ericsson Enterprise Wireless, points to low-latency AI applications that cannot rely solely on centralised processing.

Local compute at the edge keeps systems operational when network conditions fluctuate, while software-defined WAN (SD-WAN) and secure access service edge (SASE) maintain performance and security across dispersed locations.

Adam Gardner, Head of Edge at NEXTDC, expands on this by noting that edge deployment strategies must be tailored to specific workload needs, balancing proximity with the economies of scale offered by central facilities.

Ignoring edge in AI design will leave latency-sensitive workloads exposed to outages and compliance breaches.
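To make the proximity trade-off concrete, the Python sketch below compares estimated round-trip latency from three hypothetical sites against a latency budget. It assumes light in optical fibre covers roughly 200,000 km per second (about 5 microseconds per kilometre one way) and ignores queuing, switching, and radio access delays. The site names, fibre distances, processing times, and the 10 ms budget are illustrative assumptions, not figures from any of the organisations quoted.

```python
"""Rough latency-budget comparison of an edge site versus central facilities.

All site names, fibre distances, processing times and the budget are
hypothetical values chosen for illustration.
"""
from dataclasses import dataclass

# Assumption: light in optical fibre travels at roughly 200,000 km/s,
# i.e. about 5 microseconds per kilometre, one way.
FIBRE_US_PER_KM = 5.0


@dataclass
class Site:
    name: str
    fibre_route_km: float  # hypothetical fibre path length to users or devices
    processing_ms: float   # hypothetical inference time at this site


def round_trip_ms(site: Site) -> float:
    """Propagation there and back plus processing; ignores queuing and switching."""
    propagation_ms = 2 * site.fibre_route_km * FIBRE_US_PER_KM / 1000
    return propagation_ms + site.processing_ms


def sites_within_budget(sites: list[Site], budget_ms: float) -> list[str]:
    """Names of the sites whose estimated round trip fits inside the budget."""
    return [s.name for s in sites if round_trip_ms(s) <= budget_ms]


if __name__ == "__main__":
    candidates = [
        Site("on-site edge node", fibre_route_km=1, processing_ms=8.0),
        Site("metro data centre", fibre_route_km=40, processing_ms=4.0),
        Site("interstate central facility", fibre_route_km=4000, processing_ms=2.0),
    ]
    for s in candidates:
        print(f"{s.name}: {round_trip_ms(s):.2f} ms")
    # Prints 8.01 ms, 4.40 ms and 42.00 ms respectively.
    print(sites_within_budget(candidates, budget_ms=10.0))
    # ['on-site edge node', 'metro data centre']
```

In this toy example the interstate facility has the fastest processing but misses the budget on propagation delay alone, while the metro site meets it. Proximity matters, but the nearest site is not automatically the best one, which is why placement has to be tailored to each workload.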

Network backbone design is also evolving.

Binh Lam, Senior Director and Head of Enterprise Internet Services at NTT, is advancing photonic optical networking, which replaces traditional electronic processing with optical processing to achieve ultra-low latency, massive throughput, and lower energy use. The approach is already proven in submarine cable production.

Nam Je Cho, Director of Solutions Architecture at AWS, reinforces that without high-capacity, low-latency connectivity, AI training and inference pipelines stall, making even the best AI architectures ineffective in production.

Reason 2: Lack of cost control and security will drain budgets and expose AI operations

As AI scales, so does the cost pressure.

Wayne Vest, Senior Expert at McKinsey & Company, observes that CIOs are now expected to fund AI adoption from within existing budgets, making 20–40% cost optimisation a necessity.

Almost half of current technology spend is tied to infrastructure and cloud, and FinOps maturity has become a differentiator: mature practices move beyond switching off unused resources to linking costs directly to product outcomes.

Matt Boon, Senior Research Director at ADAPT, notes that cost discipline is as much about cultural change as technology, requiring shared accountability between engineering, finance, and operations.

Without a financial model that keeps AI costs transparent and sustainable, projects will stall at pilot stage or be cut entirely.
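One common way to link spend to product outcomes is showback: attributing tagged cloud costs to the products that incur them and expressing the result per unit of business output. The Python sketch below is a minimal illustration under stated assumptions. The product tags, line items, and outcome counts are hypothetical, and untagged shared costs (such as networking) are spread in proportion to each product's directly tagged spend, which is only one of several possible allocation rules.

```python
"""Minimal FinOps-style showback: attribute cloud spend to products and
express it per unit of business outcome.

Products, line items, amounts and outcome counts are hypothetical.
"""
from collections import defaultdict


def allocate_costs(line_items: list[dict]) -> dict[str, float]:
    """Sum spend per product tag, then spread untagged (shared) spend across
    products in proportion to each product's directly tagged spend."""
    direct: dict[str, float] = defaultdict(float)
    shared = 0.0
    for item in line_items:
        product = item.get("product")
        if product:
            direct[product] += item["cost"]
        else:
            shared += item["cost"]
    total_direct = sum(direct.values())
    if total_direct == 0:
        raise ValueError("no tagged spend to allocate shared costs against")
    return {p: c + shared * c / total_direct for p, c in direct.items()}


def unit_costs(allocated: dict[str, float], outcomes: dict[str, float]) -> dict[str, float]:
    """Allocated cost divided by outcome volume (here, per 1,000 requests served).
    Products with no recorded outcomes are skipped."""
    return {p: cost / outcomes[p] for p, cost in allocated.items() if outcomes.get(p)}


if __name__ == "__main__":
    line_items = [
        {"service": "gpu-training", "product": "search", "cost": 6000.0},
        {"service": "inference", "product": "search", "cost": 2000.0},
        {"service": "inference", "product": "chat-assist", "cost": 2000.0},
        {"service": "shared-network", "cost": 1000.0},  # untagged shared cost
    ]
    allocated = allocate_costs(line_items)
    print(allocated)  # {'search': 8800.0, 'chat-assist': 2200.0}
    # Outcome volume in thousands of requests served (hypothetical).
    print(unit_costs(allocated, {"search": 4400, "chat-assist": 550}))
    # {'search': 2.0, 'chat-assist': 4.0}
```

Although the hypothetical chat-assist product costs a quarter as much in total, it costs twice as much per 1,000 requests: the kind of signal a total-spend view hides and an outcome-linked view surfaces.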

The latest ADAPT data shows 63% of IT leaders plan to in-source identity and access management, signalling a preference for tighter control over sensitive data flows in AI pipelines.

Edwin Kwan, Head of Product Security at Domain Group, warns that AI datasets and models expand the attack surface, requiring zero-trust identity systems and rigorous governance.

Matt Preswick, Principal Solutions Engineer at Wiz, adds that security for AI workloads cannot be an afterthought; it must be embedded in provisioning and deployment processes to reduce vulnerabilities created by rapid iteration.

Without this security foundation, breaches will not only halt AI services but also undermine stakeholder trust in the technology.

Infrastructure resilience is just as important.

NTT’s globally distributed DDoS mitigation, highlighted by Lam, detects and neutralises attacks near their source, shielding Australian networks from high-volume events that could disrupt AI services.

Peter Alexander, Chief Technology Officer at the Department of Defence, points out that resilience planning must now include scenarios where AI workloads themselves are targeted, demanding a combination of redundancy, rapid failover, and secure-by-design architectures.

Raj Singh, Targeted Segments Enterprise Sales Leader at Schneider Electric, links resilience and cost control to sustainability goals, arguing that power-efficient data centre design both reduces operational costs and strengthens uptime by mitigating thermal and energy-related risks.

Failure to plan for resilience in AI operations will lead to costly outages and compliance exposure.

Reason 3: Infrastructure unprepared for AI density, sovereignty, and decentralisation will limit scale

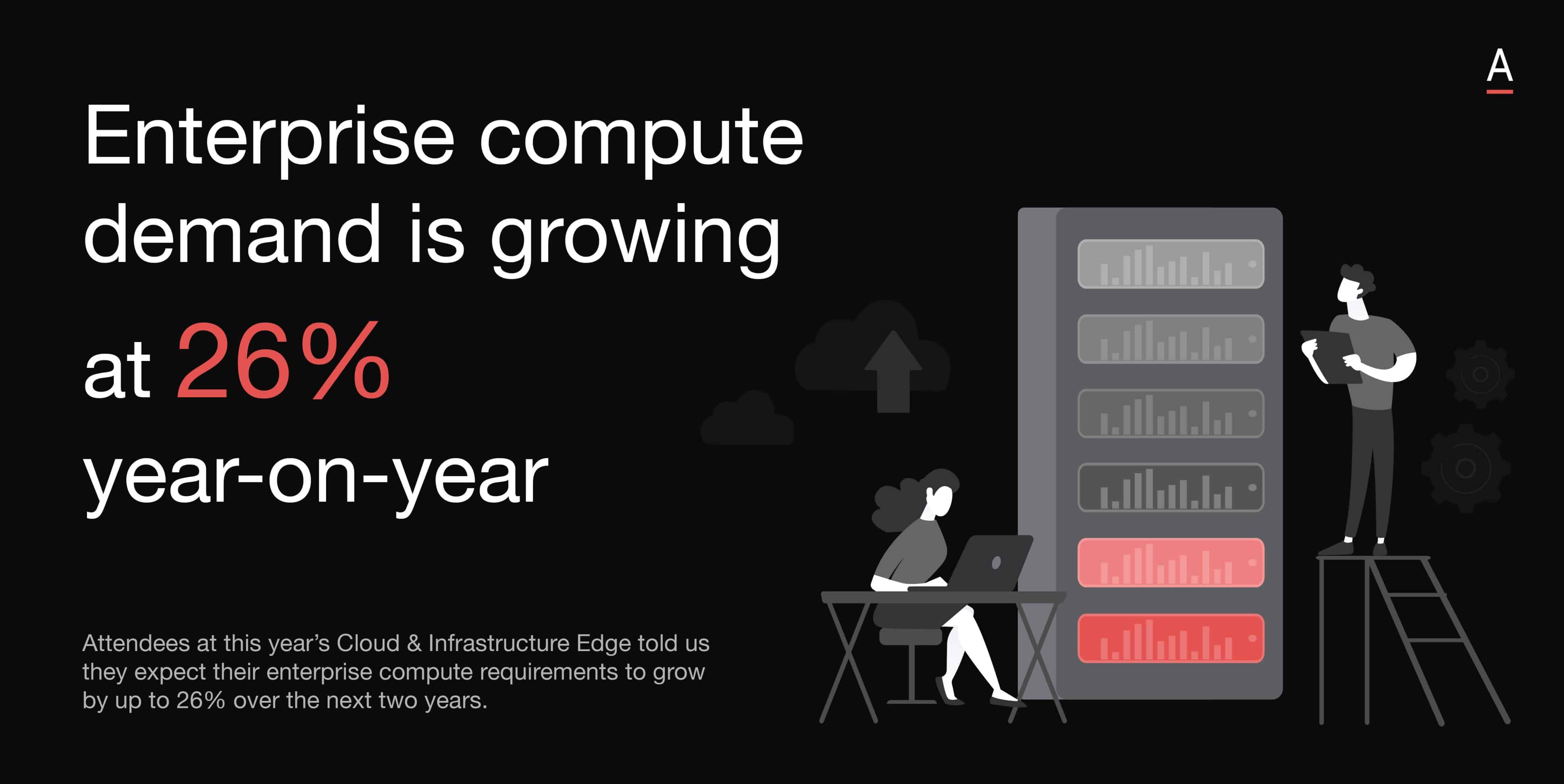

Enterprise compute demand is growing 26% year-on-year, but many facilities are not yet ready for AI’s density requirements.

Bevan Slattery, founder of five ASX-listed companies including NEXTDC and Megaport, highlights the need for higher rack power, liquid cooling, and interconnection that brings GPU clusters closer to both cloud on-ramps and enterprise edge nodes.

Without this infrastructure shift, AI performance will be throttled by power limits, cooling failures, and network bottlenecks.

For organisations with narrow margins, investment choices must be deliberate.

Tom Quinn, General Manager Core IT at Metcash, prioritises reducing risk, particularly in cyber resilience, before upgrading or replacing systems.

This ensures AI workloads can be supported without destabilising existing operations.

Gabby Fredkin, Head of Analytics and Insights at ADAPT, points to the importance of workload placement data in these decisions, enabling leaders to model where AI training, inference, and data storage will be most efficient.

Leaders who cannot model this accurately will overinvest in the wrong capacity or underinvest in critical areas.
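Workload placement modelling of this kind can be prototyped as a constraint filter followed by a cost comparison. The Python sketch below is a hypothetical illustration, not ADAPT's methodology: each venue carries an assumed hourly cost, latency, GPU capacity, and onshore flag, and each workload goes to the cheapest venue that satisfies its GPU, latency, and sovereignty requirements. The optional `latency_weight` parameter is an illustrative knob for trading cost against responsiveness.

```python
"""Toy workload-placement model: filter venues by hard constraints, then pick
the cheapest feasible option. Venues, prices, latencies, capacities and
workloads are all hypothetical."""
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Venue:
    name: str
    cost_per_hour: float  # hypothetical blended cost for the workload's footprint
    latency_ms: float     # hypothetical round trip to the workload's consumers
    onshore: bool         # True if data stays within Australian jurisdiction
    gpu_capacity: int     # GPUs available to this workload at the venue


@dataclass(frozen=True)
class Workload:
    name: str
    gpus_needed: int
    max_latency_ms: float
    requires_onshore: bool


def feasible(w: Workload, v: Venue) -> bool:
    """Hard constraints: enough GPUs, latency within limit, sovereignty respected."""
    return (
        v.gpu_capacity >= w.gpus_needed
        and v.latency_ms <= w.max_latency_ms
        and (v.onshore or not w.requires_onshore)
    )


def place(w: Workload, venues: list[Venue], latency_weight: float = 0.0) -> Optional[str]:
    """Name of the feasible venue with the lowest cost plus optional latency
    penalty, or None if no venue satisfies the constraints."""
    options = [v for v in venues if feasible(w, v)]
    if not options:
        return None
    best = min(options, key=lambda v: v.cost_per_hour + latency_weight * v.latency_ms)
    return best.name


if __name__ == "__main__":
    venues = [
        Venue("offshore cloud region", 90.0, 150.0, onshore=False, gpu_capacity=512),
        Venue("onshore cloud region", 110.0, 15.0, onshore=True, gpu_capacity=256),
        Venue("colocation GPU cluster", 80.0, 20.0, onshore=True, gpu_capacity=64),
        Venue("edge node", 30.0, 2.0, onshore=True, gpu_capacity=2),
    ]
    workloads = [
        Workload("model training", 128, 1000.0, requires_onshore=False),
        Workload("training on regulated data", 128, 1000.0, requires_onshore=True),
        Workload("customer inference", 8, 30.0, requires_onshore=True),
        Workload("on-site vision inference", 1, 5.0, requires_onshore=True),
    ]
    for w in workloads:
        print(f"{w.name} -> {place(w, venues)}")
    # model training -> offshore cloud region
    # training on regulated data -> onshore cloud region
    # customer inference -> colocation GPU cluster
    # on-site vision inference -> edge node
```

A production model would need measured costs and richer constraints; the value of even a toy version is that placement assumptions become explicit, testable, and easy to revisit as prices and capacity change.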

Sovereignty is another defining factor.

Gill proposes sector-specific AI clouds to keep sensitive research and operational data within national jurisdiction while enabling shared GPU resources for efficiency.

Matt Gurr, Senior Director of Design Management APAC at NTT, adds that sovereignty-by-design requires not just local hosting but also compliance-aware network architecture to prevent inadvertent data movement across borders.

Neglecting sovereignty will restrict AI use in regulated sectors and limit its role in mission-critical applications.
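Gurr's point that sovereignty depends on the network path, not only on where data is hosted, can be illustrated with a pre-transfer policy check. The Python sketch below assumes a hypothetical policy table mapping data classifications to permitted jurisdictions, and a planned path expressed as (hop, jurisdiction) pairs. It flags any hop outside the permitted set, even when both endpoints are onshore. The classifications, jurisdiction codes, and hop names are illustrative assumptions.

```python
"""Sketch of a pre-transfer sovereignty check: confirm every hop on a planned
network path sits in a jurisdiction permitted for the data's classification.
The policy table, classifications and hop names are hypothetical."""

# Hypothetical policy: jurisdictions each data classification may touch.
ALLOWED_JURISDICTIONS: dict[str, set[str]] = {
    "public": {"AU", "SG", "US"},
    "sensitive": {"AU"},
}


def violations(classification: str, path: list[tuple[str, str]]) -> list[str]:
    """Names of hops whose jurisdiction is not permitted for this classification.
    Unknown classifications fall back to the most restrictive rule (AU only)."""
    allowed = ALLOWED_JURISDICTIONS.get(classification, {"AU"})
    return [hop for hop, jurisdiction in path if jurisdiction not in allowed]


def check_transfer(classification: str, path: list[tuple[str, str]]) -> None:
    """Raise PermissionError if any hop would take the data out of bounds."""
    bad = violations(classification, path)
    if bad:
        raise PermissionError(f"{classification} data would transit: {', '.join(bad)}")


if __name__ == "__main__":
    # Both endpoints are onshore, but the planned route transits an offshore hub.
    path = [("sydney-dc", "AU"), ("transit-hub", "SG"), ("melbourne-dc", "AU")]
    print(violations("public", path))     # []
    print(violations("sensitive", path))  # ['transit-hub']
    try:
        check_transfer("sensitive", path)
    except PermissionError as err:
        print(f"blocked: {err}")  # blocked: sensitive data would transit: transit-hub
```

In the example, a transfer between two Australian data centres is blocked for sensitive data because the planned route transits an offshore hub: exactly the inadvertent cross-border movement that checking the hosting location alone would miss.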

Recommended actions for cloud & infrastructure leaders

Translating these risks into action requires deliberate planning and investment.

The following priorities will help leaders close the infrastructure gaps that threaten to slow or derail Australia’s AI ambitions.

Unify cloud, data centre, and edge to outpace hybrid complexity

- Create a cohesive integration strategy for public cloud, private cloud, and edge resources.

- Invest in observability tools such as enterprise digital twins to guide workload placement.

- Modernise network backbone capacity with ultra-low latency connectivity to support AI pipelines.

Embed cost control and security to sustain AI velocity

- Mature FinOps capabilities to align AI costs with business outcomes.

- In-source identity and access management to safeguard AI pipelines.

- Build resilience through secure-by-design architecture and distributed threat mitigation.

Design for AI density, sovereignty, and decentralisation

- Upgrade facilities for higher rack power, advanced cooling, and GPU interconnection.

- Use workload placement modelling to balance performance, risk, and cost.

- Implement sovereignty-by-design to keep regulated data compliant while enabling scale.

Australia’s AI ambitions will succeed only if cloud, data centre, and edge environments are integrated into a cohesive operational model, cost and security disciplines are embedded from the outset, and infrastructure is designed for AI density, sovereignty, and decentralisation.

Without this radical rethink, organisations will face slower AI delivery, spiralling costs, mounting security risks, and competitive decline.