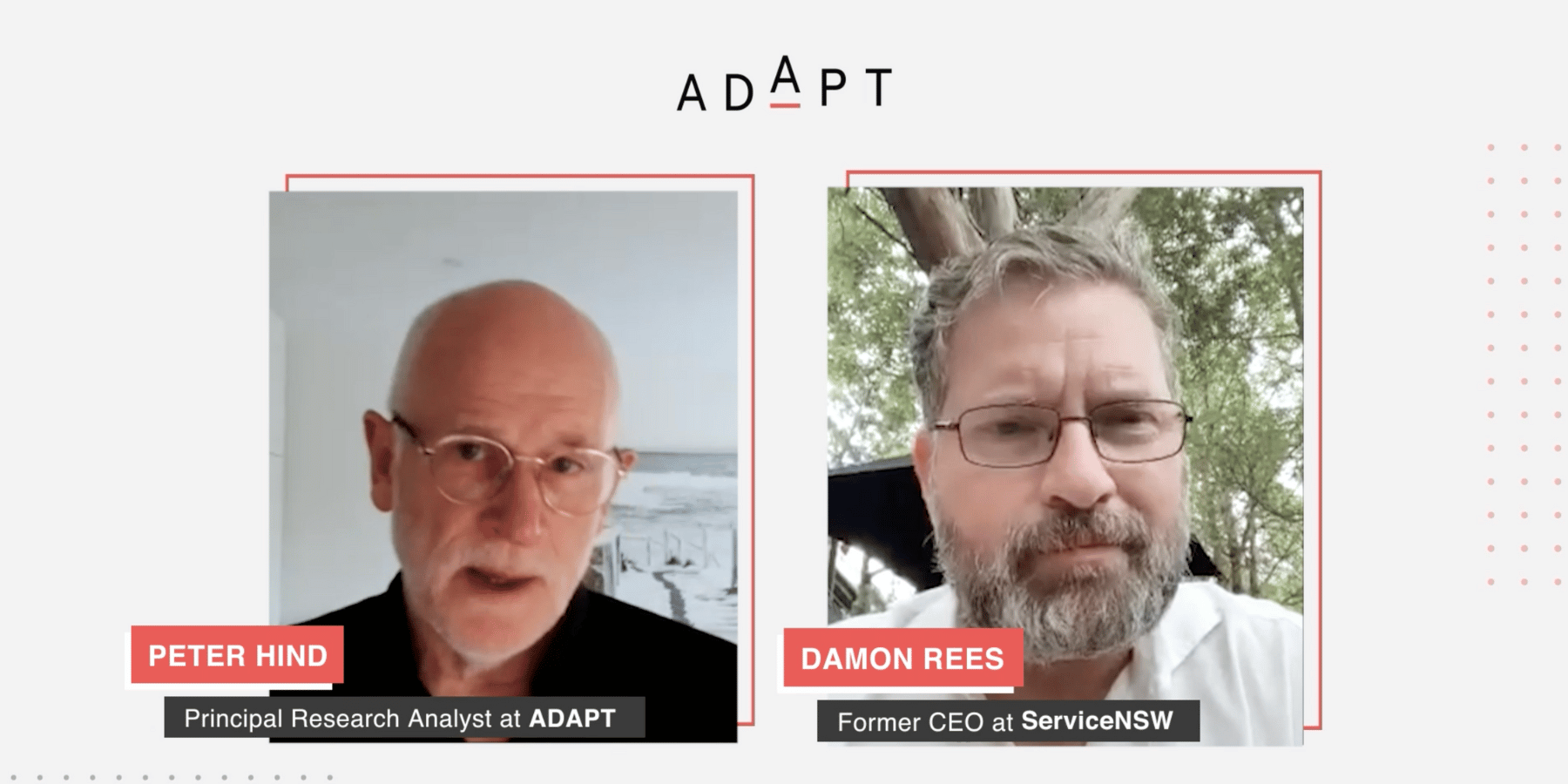

Milan Korbel is a Partner in McKinsey’s Melbourne office, where he leads QuantumBlack’s manufacturing analytics practice in Australia and globally. At ADAPT’s CFO Edge, he spoke to ADAPT Senior Analyst Peter Hind about why organisations must be prepared to evolve AI so that it complements their employees’ work.

Peter Hind:

We’re going to talk about artificial intelligence. You work for QuantumBlack within McKinsey, so obviously your organisation sees the potential for artificial intelligence. Do you think artificial intelligence is still embryonic, or are we now accepting it in our work environments?

Milan Korbel:

Well, that’s a good question. I think the technology itself is still in development. It has matured as an application in some situations, but as a technology it is still under development. At points it just doesn’t work perfectly; you still have to keep nudging it until it gets there. In terms of adoption in business, I think most businesses still aren’t adopting it fully. There are quite a lot of pilots, several pilots across an organisation, but only a few organisations reach the level where they transform their businesses and their functions, vertically and horizontally. Just a few of them.

Peter Hind:

And you were telling me, when we spoke beforehand, Milan, that a lot of people look at artificial intelligence and expect it to be a complete solution from the outset, when really it is something you need to be prepared to evolve. Could you explain a bit more about your thinking here?

Milan Korbel:

That’s a good question, again, because that’s what we hear. People feel it is an off-the-shelf, plug-and-play solution.

For some applications, which are deterministic, it might be, although even then it’s dangerous, and I can’t fully explain why. But usually for 90% of applications, maybe 95%, I don’t know the exact number, I’m just estimating, the majority of applications are a development in progress. You start with the technology: the data, the technology, the algorithms. But you have to keep working on it. You have to keep testing it and iterating on it. It gives you an answer, the answer is mostly wrong, so you constrain it again, until you really narrow the space where the artificial intelligence performs better for that problem. But if you take that and apply it somewhere else, it will fail, right? So we’re really speaking about problem-centric AI, not general AI.

Peter Hind:

What you’re talking about there, Milan, sounds to me like something an organisation has to be willing to grow with, rather than just take out of its toolbox. This is something where you want to keep getting better and better at applying it.

Milan Korbel:

Absolutely. Look, maybe I see it too simply, but artificial intelligence can solve problems much faster than humans. I feel humans have a big role in defining the problem, but then, hey, let’s forget about us solving it. We now have tools for that.

So we have to find a collaboration with AI and really start accepting it, because it’s smarter than us when developed right.

But it’s not necessarily going to define the problems for us. Do you know what I mean?

Peter Hind:

No, no, and I think that’s an important point, because what I’m hearing you say is that the world is very dynamic and fast-moving. As humans, we haven’t got the time or the luxury to sit and analyse it. We need machines to help us get answers quickly to the problems we face. But people have got to trust the technology. They’ve got to feel that it’s not there to kill them; it’s there to complement them.

Milan Korbel:

That’s exactly it. Basically, you have to start learning it from the basics, so you develop maybe a simple model, and then you keep evolving it. It’s like learning to ride a bike: you have those training wheels, you keep helping and learning, and then you let it go. But it takes time, in terms of understanding what it does.

It should not be a black box. Once you understand its uses, what it does and why it does it, then you really start adopting and accepting it and working with it much more closely.

Take the example I mentioned of using AI to optimise a refinery. It looks at process integration, at the whole process itself, and because it’s process integration, it understands the connections between different areas and can recommend set points for the refinery. As a human, you usually work in separate areas, so you’re disconnected. The human mind can handle five or six variables and decide on them; this can handle thousands. So yes, but you have to grow into it. If you just put it in there, people would not trust it. You have to grow into it, developing it from simple to more complex.

Peter Hind:

Okay, I think that implies a change management task: showing humans that you want them to be casting an eye over this technology and putting sanity checks on what it gives you, that type of thing, so that you make it better.

Milan Korbel:

Absolutely. That’s exactly it; that’s a must. Artificial intelligence will always, always in my experience, recommend some nonsense. If the problem is very simple, it gets it right very quickly. When the problem is really complex, it starts recommending and testing different directions, and very often those millions of directions are wrong. Then it finds the right one and starts exploiting that direction. See what I mean? You have to keep nudging it in the right direction, and that’s where humans come into play.

Peter Hind:

So what I’m hearing you say is that to make a success of AI, you’ve got to really engage your staff by showing them how it complements their work: how it can give them insights and maybe free them from some of the drudgery and monotony of their jobs.

Milan Korbel:

Absolutely. As I say, I see two blockers with humans. The first is mostly a sense of superiority: they think they’re superior to the answer and can do better, “because I have been working here for 50 years” and “I have been doing the forecast for 50 years.” The second is the fear of losing their job. And I think that’s where we have to educate.

It’s also the government that has to educate the public that AI is not necessarily there to replace you, but to support you, and to develop and produce new jobs.

Peter Hind:

The other thing I think I picked up from our discussion beforehand is that when we talk about forecasting, AI and predictive analysis, we always seem to imply financial outcomes. But actually, there are other things we need to forecast. When do we need to maintain an expensive piece of equipment? What’s the weather forecast for planting crops? What will commodity prices be if you want to produce some steel or something like that? All of those things become integral to a smarter organisation.

Milan Korbel:

Absolutely. The bottom line is usually driven by value or by user experience.

If you want to make people happy or help them with their job, eliminate the burden. That’s where AI can help a lot.

So it’s about enhancing the working environment, or the value. There might be other drivers, such as health and safety, but what I see most is that applications are driven by value and by user experience.

Peter Hind:

Okay, Milan, thank you for your time.

Milan Korbel:

Thank you.
